This is a common question at Artificial Intelligence conferences and discussion forums. Based on my knowledge, I thought I would answer some of these questions: 1.) Image Classification (also called Image Recognition) is the process of creating a thematic image where each pixel is assigned a number representing a class / […]

Category: Emerging Technologies

Emerging technologies include a variety of technologies such as educational technology, information technology, nanotechnology, biotechnology, cognitive science, psychotechnology, robotics, and artificial intelligence.

Azure Cognitive Services–Experience Image Recognition using Custom Vision (Build a Harrison Ford Classifier)

Custom Vision Service, part of the Azure Cognitive Services landscape of pretrained API services, lets you customize state-of-the-art Computer Vision models for your specific use case. Using the Custom Vision Service, you can upload a set of images of your choice, categorize them using tags/categories, and automatically train the image recognition […]

Azure Cosmos DB – TTL (Time to Live) – Reference Use Case

The TTL capability within Azure Cosmos DB is a lifesaver, as it takes the necessary steps to purge redundant data based on the configuration you set. Consider an Industrial IoT scenario: devices can produce vast amounts of telemetry information, logs, and user session information that is only useful until we […]

Azure Database for MariaDB: Public Preview

During Ignite 2018, Microsoft announced MariaDB support in Azure Database services. Today it has been opened for Public Preview for all Azure customers. What is MariaDB? MariaDB is a community-developed fork of the MySQL relational database management system, intended to remain free under the GNU GPL. Development is led by some […]

NDepend–VSTS/Azure DevOps Integration–Part 01

In my previous article I wrote an introduction to NDepend and how it helps Agile teams ensure code quality. That article showed how to use NDepend on a developer machine. In this article we will familiarize ourselves with using NDepend in your build automation pipeline in your […]

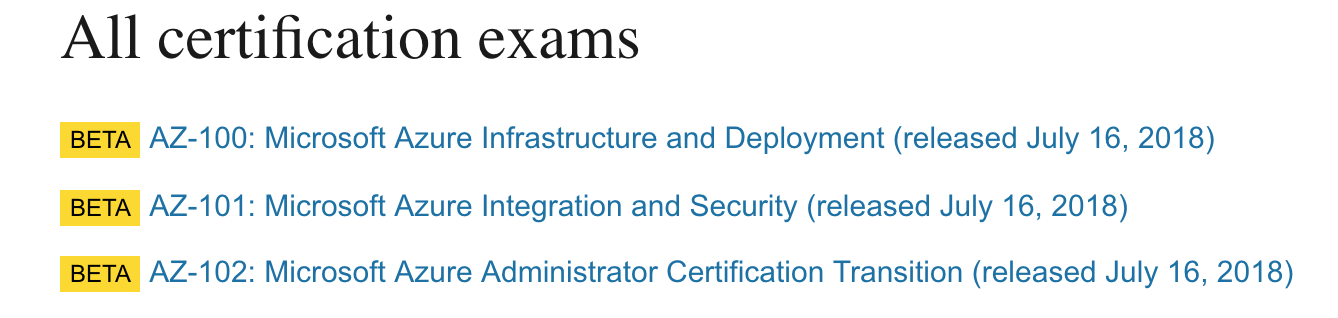

New Microsoft Azure Certifications

Microsoft has recently announced new certification exam tracks for Azure Administrators, Developers and Architects. Here is the lineup that should help you advance your career with the right certifications. The three new Microsoft Azure Certifications are: Microsoft Certified Azure Developer, Microsoft Certified Azure Administrator, and Microsoft Certified Azure Architect. These certifications would essentially split the previous […]
